Kevin Doran

Machine learning. Investigating animal vision in the Tom Baden Lab (PhD). Previously, R&D engineer at Weta Digital. Email: firstname at this domain

Categorical distributions are effective neural network outputs for event prediction

Temporal point processes, the sub-field of machine learning concerned with predicting event times, has continued to emphasize benchmarking on small datasets or on datasets with little usable information. As a result, larger models with less regularization have been overlooked. We noticed this while building models for spike prediction. We wrote this paper to characterize the datasets in common use and to show how effective a simple model can be when datasets are larger and contain more information about the underlying event-generating process. [arxiv page] [arxiv pdf]

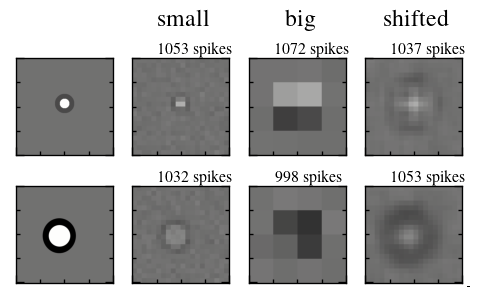
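One way to use a categorical distribution as the output works like this. Divide a fixed horizon after the current time into equal bins. Have the network emit one logit per bin. Train with the negative log-likelihood (cross-entropy) of the bin that contains the next event. The NumPy sketch below covers only that output head and loss. The horizon, the bin count, the clipping of late events into the last bin, and the function names are illustrative assumptions, not the paper's exact setup.

```python
import numpy as np

def time_to_bin(dt, horizon, n_bins):
    """Map time-until-next-event values to bin indices on [0, horizon)."""
    idx = np.floor(np.asarray(dt, dtype=float) / horizon * n_bins).astype(int)
    # Assumption: events beyond the horizon are clipped into the last bin.
    return np.clip(idx, 0, n_bins - 1)

def log_softmax(logits):
    """Numerically stable log-softmax over the last axis."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))

def categorical_nll(logits, dt, horizon, n_bins):
    """Mean negative log-likelihood of the observed bins (i.e. cross-entropy)."""
    log_p = log_softmax(logits)
    bins = time_to_bin(dt, horizon, n_bins)
    return -log_p[np.arange(len(bins)), bins].mean()

def expected_time(logits, horizon):
    """A point prediction: the mean of the categorical distribution over bin centres."""
    n_bins = logits.shape[-1]
    centres = (np.arange(n_bins) + 0.5) * horizon / n_bins
    return np.exp(log_softmax(logits)) @ centres

# Usage with random stand-in logits (4 examples, 20 bins over a 2 s horizon).
rng = np.random.default_rng(0)
logits = rng.normal(size=(4, 20))
dt = np.array([0.1, 0.5, 1.2, 3.0])  # seconds until the next event
print(categorical_nll(logits, dt, horizon=2.0, n_bins=20))
print(expected_time(logits, horizon=2.0))
```

Because the output is a full distribution over bins, it can represent multimodal predictions without committing to a parametric density. The trade-off is a temporal resolution fixed by the bin width.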

On shifted noise for 2D receptive field estimation

An empirical test of shifted noise for estimating 2D receptive fields. Results support the idea that shifted noise cannot be thought of as a drop-in replacement for vanilla checkerboard noise. more

Four ways (two bad, two good) of calculating 2D receptive fields from spike snippets

Collecting a window of stimulus data around every spike in a recording of neurons is a common analysis step in neuroscience. The mean of these snippets is termed the spike-triggered average (STA). Each snippet spans time as well as two spatial dimensions, so if you want to collapse the result down to a 2D receptive field, there are a few options. I've seen people use the variance of the snippets, which I think is not a good approach. A better approach is to rephrase the problem in terms of likelihood. more

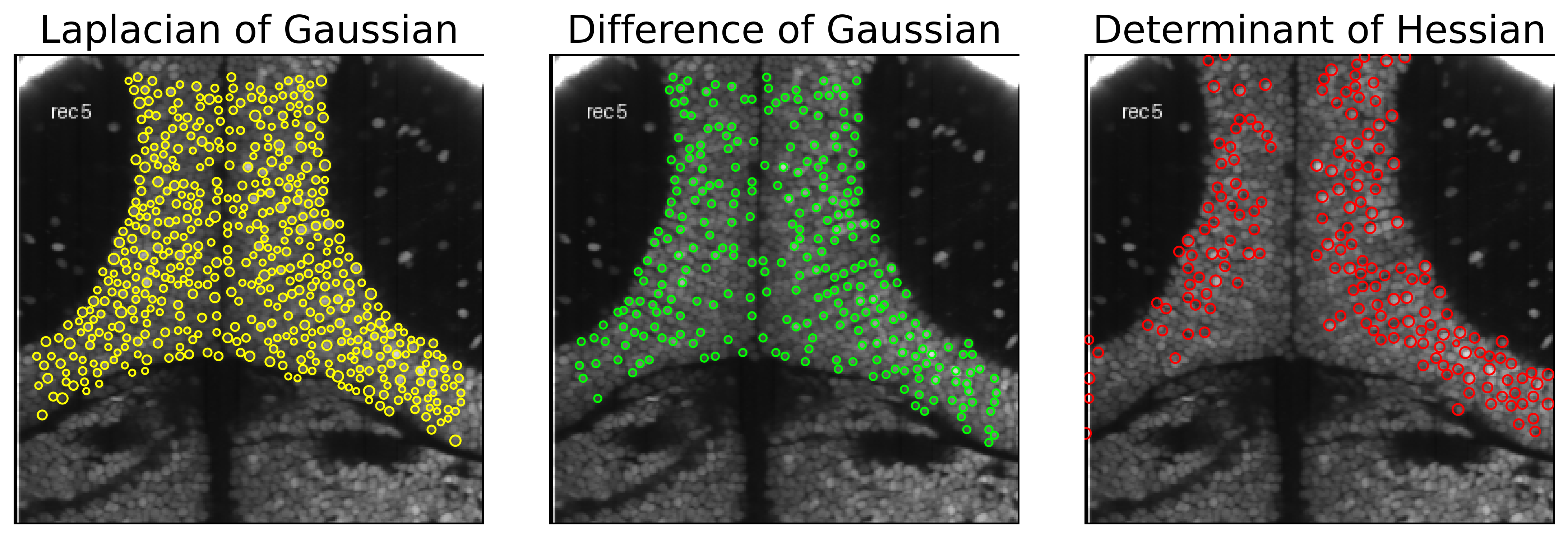
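Below is a minimal NumPy sketch of the snippet collection and the STA. It assumes the stimulus is stored as an array of frames with shape (T, H, W) and that each spike has already been assigned to a frame index. The window here ends at the spike's frame. `n_before` and the function names are illustrative, and the 2D collapsing methods discussed in the post are not reproduced.

```python
import numpy as np

def spike_snippets(stimulus, spike_frames, n_before):
    """Collect the n_before frames ending at each spike's frame.

    stimulus: array of shape (T, H, W), one stimulus frame per time step.
    spike_frames: frame index of each spike.
    Returns shape (n_spikes_kept, n_before, H, W). Spikes too close to the
    start of the recording for a full window are skipped.
    """
    stimulus = np.asarray(stimulus)
    snippets = [stimulus[f - n_before + 1 : f + 1]
                for f in spike_frames
                if f - n_before + 1 >= 0 and f < len(stimulus)]
    if not snippets:
        raise ValueError("no spike has a complete stimulus window")
    return np.stack(snippets)

def spike_triggered_average(stimulus, spike_frames, n_before):
    """The STA: the mean snippet, still shaped (n_before, H, W)."""
    return spike_snippets(stimulus, spike_frames, n_before).mean(axis=0)

# Usage: a toy cell that fires whenever pixel (3, 4) is bright in the current frame.
rng = np.random.default_rng(1)
stim = rng.choice([-1.0, 1.0], size=(1000, 8, 8))  # binary checkerboard noise
spikes = np.flatnonzero(stim[:, 3, 4] > 0)
sta = spike_triggered_average(stim, spikes, n_before=5)
print(sta.shape)  # (5, 8, 8)
```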

Frame registration and cell detection for 2-photon recordings

I worked on a project that involved 2-photon recording of the tectum of a zebrafish larva. For a single larva, we made 14 recordings, each 15 minutes long and separated by gaps of approximately 2 minutes. The larva moved around, especially at the beginning, so to extract cell traces we first needed a way to align frames and to detect cells both within and across recordings. more
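This summary does not say which registration method was used. For reference, a common baseline for rigid (translation-only) alignment is phase correlation, which is a swapped-in technique here and not necessarily the project's approach. The NumPy sketch below estimates the integer-pixel shift between a frame and a reference image. It ignores rotation, non-rigid motion and sub-pixel shifts, and the function name is hypothetical.

```python
import numpy as np

def phase_correlation_shift(reference, frame):
    """Estimate the integer (dy, dx) that, passed to np.roll, aligns frame to reference."""
    cross_power = np.fft.fft2(reference) * np.conj(np.fft.fft2(frame))
    cross_power /= np.abs(cross_power) + 1e-12  # keep only the phase
    corr = np.fft.ifft2(cross_power).real       # sharp peak at the relative shift
    dy, dx = np.unravel_index(np.argmax(corr), corr.shape)
    # Peaks past the halfway point are negative shifts (the FFT wraps around).
    h, w = reference.shape
    if dy > h // 2:
        dy -= h
    if dx > w // 2:
        dx -= w
    return int(dy), int(dx)

# Usage: recover a known circular shift of a random image.
rng = np.random.default_rng(2)
ref = rng.random((64, 64))
frame = np.roll(ref, (3, -5), axis=(0, 1))
shift = phase_correlation_shift(ref, frame)
aligned = np.roll(frame, shift, axis=(0, 1))
```

In practice the reference might be the mean of a stable stretch of frames, with each frame registered to it in turn.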

Robust learning rate finder with Kalman smoothing

Kalman smoothing can be applied to the learning rate range test to produce smooth learning rate curves from which a learning rate can be chosen. more

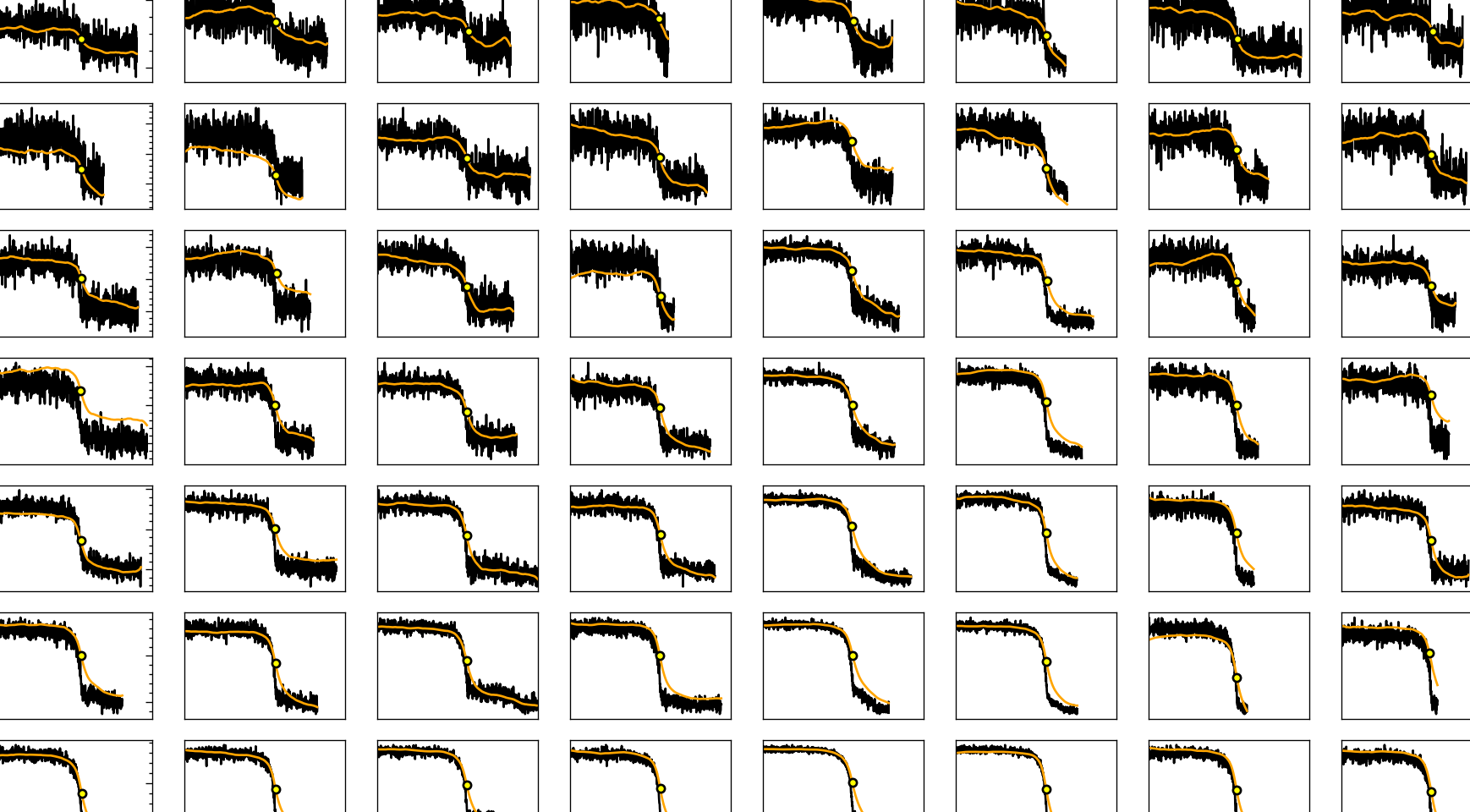
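A minimal sketch of the idea follows, under stated assumptions. The per-batch loss from the range test is treated as noisy observations of a latent curve that follows a scalar random walk. The curve is then estimated with a Kalman filter followed by a Rauch-Tung-Striebel (RTS) backward pass. The noise variances `q` and `r`, and the "steepest descent of the smoothed loss" rule in the hypothetical `suggest_lr`, are illustrative choices rather than the post's exact model.

```python
import numpy as np

def kalman_smooth(y, q, r):
    """Rauch-Tung-Striebel smoother for a scalar random-walk model.

    Assumed model (not necessarily the post's):
        x_t = x_{t-1} + w_t,  w_t ~ N(0, q)   underlying loss curve
        y_t = x_t + v_t,      v_t ~ N(0, r)   noisy per-batch loss
    Returns the smoothed estimate of x_t for every step.
    """
    y = np.asarray(y, dtype=float)
    n = len(y)
    x_pred, p_pred = np.empty(n), np.empty(n)  # one-step predictions
    x_filt, p_filt = np.empty(n), np.empty(n)  # filtered estimates
    x_prev, p_prev = y[0], r  # prior for the first step: an assumption
    for t in range(n):
        # Predict: a random walk keeps the mean and adds process noise.
        x_pred[t] = x_prev
        p_pred[t] = p_prev + q
        # Update: blend prediction and observation using the Kalman gain.
        k = p_pred[t] / (p_pred[t] + r)
        x_filt[t] = x_pred[t] + k * (y[t] - x_pred[t])
        p_filt[t] = (1.0 - k) * p_pred[t]
        x_prev, p_prev = x_filt[t], p_filt[t]
    # Backward pass: correct each filtered estimate using later observations.
    x_smooth = x_filt.copy()
    for t in range(n - 2, -1, -1):
        gain = p_filt[t] / p_pred[t + 1]
        x_smooth[t] = x_filt[t] + gain * (x_smooth[t + 1] - x_pred[t + 1])
    return x_smooth

def suggest_lr(lrs, losses, q=1e-4, r=1e-2):
    """Hypothetical heuristic: the learning rate where the smoothed loss falls fastest."""
    lrs = np.asarray(lrs, dtype=float)
    smooth = kalman_smooth(losses, q, r)
    slope = np.gradient(smooth, np.log(lrs))
    return lrs[np.argmin(slope)]
```

The ratio `q / r` controls how smooth the curve is. A smaller ratio trusts the random-walk prior more and the individual noisy batches less. Unlike a causal exponential moving average, the backward pass uses later observations too, so the smoothed curve is not shifted towards higher learning rates.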

Spike distance function as a learning objective for spike prediction

A learning objective that embraces the nature of spikes (or any other events): discrete points in continuous time. This learning objective allows models to predict the timing of spikes with both high and low temporal precision. For comparison, predictions made using the Poisson learning objective are also shown in the figure. [paper page] [paper pdf]

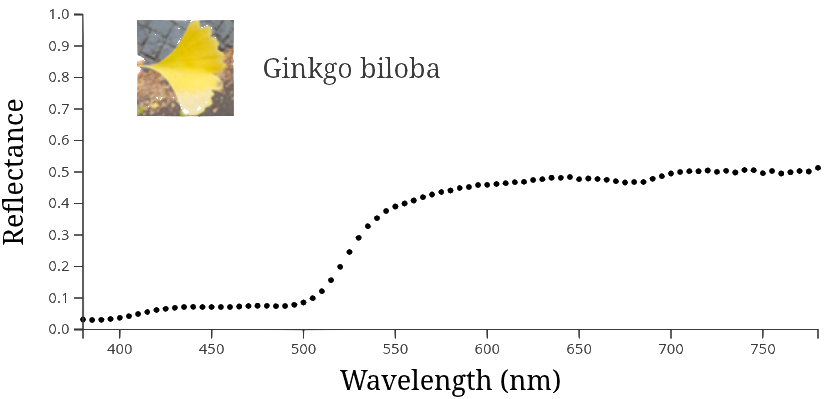
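One simple reading of a "spike distance function" is, for each time point, the distance to the nearest spike. A model can regress this target, and spike times can then be recovered from the minima of its prediction. The NumPy sketch below computes such a target on a discretized time grid, plus a naive recovery step. The paper's exact definition and inference procedure may differ. `spikes_from_distance` and its threshold are hypothetical, and the Poisson objective is not shown.

```python
import numpy as np

def spike_distance(spike_times, t_grid):
    """Distance from each time in t_grid to the nearest spike (inf if there are no spikes)."""
    spikes = np.sort(np.asarray(spike_times, dtype=float))
    t = np.asarray(t_grid, dtype=float)
    if spikes.size == 0:
        return np.full(t.shape, np.inf)
    # Index of the first spike at or after each time point.
    idx = np.searchsorted(spikes, t)
    after = spikes[np.clip(idx, 0, len(spikes) - 1)]
    before = spikes[np.clip(idx - 1, 0, len(spikes) - 1)]
    return np.minimum(np.abs(after - t), np.abs(t - before))

def spikes_from_distance(dist, t_grid, threshold):
    """Hypothetical inference step: interior local minima of dist below a threshold."""
    d = np.asarray(dist, dtype=float)
    is_min = (d[1:-1] <= d[:-2]) & (d[1:-1] < d[2:]) & (d[1:-1] < threshold)
    return np.asarray(t_grid)[1:-1][is_min]

# Usage: build the target for two spikes, then recover them from it.
t_grid = np.arange(100) / 100  # 10 ms bins over 1 s
dist = spike_distance([0.25, 0.6], t_grid)
recovered = spikes_from_distance(dist, t_grid, threshold=0.005)
```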

Neural networks and color

This is a project to see if anything can be learnt by drawing parallels between human color perception and neural networks trained for vision tasks. more

Motivating ELBO from importance sampling

The evidence lower bound (ELBO) appears naturally when you try to sample from the posterior distribution using an approximate distribution. I think this way of arriving at the ELBO is more intuitive and reveals more about why concessions are being made. more
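A compact version of the argument uses standard notation chosen here, not taken from the post: x is observed, z is latent, and q is the approximate distribution. First write the evidence as an importance-sampling expectation under q. Then move the log inside the expectation with Jensen's inequality. The final line shows that the gap is exactly a KL divergence, so the bound is tight only when q equals the true posterior.

```latex
\begin{aligned}
p(x) &= \int p(x, z)\,dz
      = \int q(z)\,\frac{p(x, z)}{q(z)}\,dz
      = \mathbb{E}_{z \sim q}\!\left[\frac{p(x, z)}{q(z)}\right], \\
\log p(x) &= \log \mathbb{E}_{z \sim q}\!\left[\frac{p(x, z)}{q(z)}\right]
      \;\ge\; \mathbb{E}_{z \sim q}\!\left[\log p(x, z) - \log q(z)\right]
      = \mathrm{ELBO}(q), \\
\log p(x) - \mathrm{ELBO}(q) &= \mathrm{KL}\big(q(z)\,\|\,p(z \mid x)\big) \;\ge\; 0.
\end{aligned}
```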

Inside neural network training

Some videos showing how weights, activations and gradients change at every step as a network is trained. more

Anki notes

I've exported some of my Anki decks. Most of the content is on the answer side of each card. If you aren't using Anki, I invite you to read Michael Nielsen's argument for it.

2021's Summer of Math Exposition

The following are some posts made for 3Blue1Brown's Summer of Math Exposition. They are attempts to make clear and memorable math explainers for a wide audience.